US units to SI units 2

- Thread starter espus81

- Status

- Not open for further replies.

Both those were long ago deprecated in favor of the current definition.

The current definitions of all SI base units require no physical artifacts, which degrade through handling and measurement, and can be tested and replicated in any sufficiently equipped laboratory in the world. The lone holdout was the kilogram, which was not converted from an artifact-based standard to a definition based purely on a physical constant until last year.

TTFN (ta ta for now)

I can do absolutely anything. I'm an expert! faq731-376 forum1529 Entire Forum list

btrueblood

Mechanical

The article on the "metre" in Wikipedia has an interesting discussion of the history of the standard and how its precision increased over time; see the table under the "Timeline" heading:

1795 - defined as one ten-millionth of the distance from pole to equator - relative uncertainty ~ 10^-4

1799 - platinum bar - rel. uncertainty ~ 10^-5

1889 - Pt/Ir bar - rel. uncertainty ~ 10^-7

1960 - 1,650,763.73 wavelengths of light from a specified transition in krypton-86 - rel. uncertainty ~ 4x10^-9

1983 - length of the path travelled by light in a vacuum in 1/299,792,458 second - rel. uncertainty ~ 10^-10
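As a quick check of the arithmetic behind the 1960 and 1983 entries, here is a minimal Python sketch. It uses only the values quoted in the timeline plus the fact that the speed of light has been fixed exactly at 299,792,458 m/s since 1983; the variable names are illustrative.

```python
from fractions import Fraction

# Speed of light in vacuum; fixed exactly at this value (m/s) since the 1983 definition.
C = 299_792_458

# 1983: one metre is the path light travels in vacuum in 1/299,792,458 s.
travel_time_s = Fraction(1, C)        # exact rational number of seconds
metre_1983 = C * travel_time_s        # exact: evaluates to Fraction(1, 1)

# 1960: 1,650,763.73 wavelengths of the krypton-86 line make one metre,
# so one wavelength is 1/1,650,763.73 m.
KR86_WAVELENGTHS_PER_METRE = 1_650_763.73
kr86_wavelength_nm = 1e9 / KR86_WAVELENGTHS_PER_METRE

# (year, approximate relative uncertainty) as listed in the timeline
TIMELINE = [(1795, 1e-4), (1799, 1e-5), (1889, 1e-7), (1960, 4e-9), (1983, 1e-10)]
overall_gain = TIMELINE[0][1] / TIMELINE[-1][1]

print(metre_1983)                      # 1
print(f"{kr86_wavelength_nm:.3f} nm")  # 605.780 nm
print(f"{overall_gain:.0e}")           # 1e+06
```

Using `Fraction` for the 1983 case keeps the result exact, which mirrors the point of that definition: once c is fixed, the metre follows from the second with no measured quantity left over.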

One could argue that beyond the 1799 standard most of us would not care much, unless dealing with fairly high-precision machinery or electronic devices. Then again, this computer I'm typing on, my phone, and other electronics would probably not be possible without the dimensional precision implied by at least the 1960 standard. What the list really says is that the growing ability to measure time with very high precision and repeatability means you can improve other measurements by tying them to a time-based standard, so why not?

Note that the newton is not a base unit and includes the kg (1 N = 1 kg·m/s^2), so it's a circular definition.
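One way to see the circularity is to write each unit as exponents of the base units. A minimal Python sketch, tracking only kg, m and s; the tuple representation and helper names are illustrative assumptions, not any standard library:

```python
# Units as exponents of (kg, m, s); only three of the seven SI base units are tracked.
Unit = tuple[int, ...]

KILOGRAM: Unit = (1, 0, 0)
METRE: Unit = (0, 1, 0)
SECOND: Unit = (0, 0, 1)

def mul(a: Unit, b: Unit) -> Unit:
    """Multiply two units by adding their base-unit exponents."""
    return tuple(x + y for x, y in zip(a, b))

def power(a: Unit, n: int) -> Unit:
    """Raise a unit to an integer power by scaling its exponents."""
    return tuple(x * n for x in a)

def depends_on_kilogram(unit: Unit) -> bool:
    """True if the kilogram appears in the unit's base-unit expansion."""
    return unit[0] != 0

# The newton is derived: 1 N = 1 kg·m/s^2
NEWTON = mul(mul(KILOGRAM, METRE), power(SECOND, -2))

print(NEWTON)                       # (1, 1, -2)
print(depends_on_kilogram(NEWTON))  # True
```

Any definition of the kilogram that goes through the newton would need the kilogram to already be defined, which is exactly the circularity.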

The kilogram's issue was not so much a "why not?"; it was that the "standard" kept changing every time it was measured, so no one could even be sure whether the standard was still valid.

In fact, the biggest hang-up with the kilogram's new definition had been that the measured values of the physical constants being considered weren't fixed either; hence the new definition fixes h exactly at 6.62607015*10^-34 kg*m^2/s (i.e., J*s).
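Because h carries units of kg·m²/s, fixing its numerical value (with the metre and second already defined) leaves only one possible kilogram. A minimal Python sketch of that rearrangement, tracking units as (kg, m, s) exponents; the helper and variable names are illustrative:

```python
from fractions import Fraction

# Exact numerical value of h fixed by the SI redefinition,
# expressed in kg·m^2/s (equivalently J·s).
H_NUMERIC = Fraction(662_607_015, 10**42)  # 6.62607015e-34

# Units as (kg, m, s) exponent tuples.
H_UNITS = (1, 2, -1)  # kg·m^2·s^-1

def combine(*units):
    """Multiply units by adding their (kg, m, s) exponents."""
    return tuple(sum(exps) for exps in zip(*units))

# Rearranging h = 6.62607015e-34 kg·m^2/s for the kilogram:
#   1 kg = (1 / 6.62607015e-34) · h · m^-2 · s
multiplier = 1 / H_NUMERIC                # exact Fraction
kg_units = combine(H_UNITS, (0, -2, 1))   # h times s/m^2

print(kg_units)           # (1, 0, 0), i.e. a pure kilogram
print(float(multiplier))  # about 1.509e33
```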

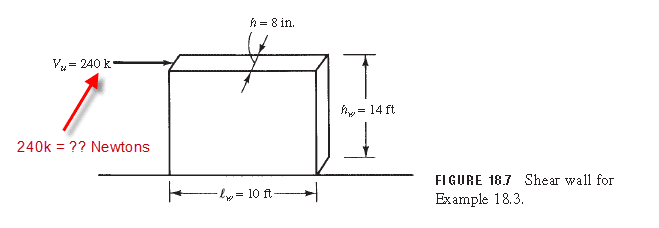

Design of Reinforced Concrete by Jack McCormac.

The text of example 18.3 cited by OP already shows the conversion: 315,488 lb = 315.5 k > 240 k
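Since the thread is about moving to SI, the same comparison can be carried through to kN. A minimal Python sketch, assuming "k" means kips (1 kip = 1,000 lbf) and using the exact lbf-to-newton factor that follows from the international pound (0.45359237 kg) and standard gravity (9.80665 m/s²); the function names are illustrative:

```python
# Exact by definition: 1 lbf = 0.45359237 kg × 9.80665 m/s^2 = 4.4482216152605 N
LBF_TO_N = 0.45359237 * 9.80665
LB_PER_KIP = 1000

def lb_to_kips(lb: float) -> float:
    """Convert pounds-force to kips."""
    return lb / LB_PER_KIP

def kips_to_kn(kips: float) -> float:
    """Convert kips to kilonewtons."""
    return kips * LB_PER_KIP * LBF_TO_N / 1000

value_kips = lb_to_kips(315_488)  # value from example 18.3
threshold_kips = 240              # 240 k value from the same example

print(f"{value_kips:.1f} k > {threshold_kips} k: {value_kips > threshold_kips}")
print(f"{kips_to_kn(value_kips):.1f} kN vs {kips_to_kn(threshold_kips):.1f} kN")
```

Because the conversion is a single positive factor, the inequality holds in either unit system; only the numbers change (roughly 1403.4 kN against 1067.6 kN).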

Cal91 (and some others).

For a good explanation of "base units" see

They are applied to the seven base "physical quantities" in terms of which all other physical quantities can be defined.

We could measure any or all of these base physical quantities in any arbitrarily chosen units. Furlongs for length, carats for mass, etc. Our physical laws could still be expressed mathematically, but would require the inclusion of arbitrary constants. By choosing our "base units" cleverly we can arrange things so that many of these arbitrary constants are unity. This is what a good "system of units" will attempt to achieve.

For an example, consider Newton's Second Law. As originally formulated by Sir Isaac, this stated that "the rate of change of momentum of a body is directly proportional to the force applied", which (for constant mass) readily converts to F = k·m·a, where k is a constant of proportionality. Choose your base units cannily and this "simplifies" to the now familiar F = ma.
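To make the "arbitrary constant" concrete, here is a minimal Python sketch comparing SI, where k = 1, with a pound-mass/pound-force/foot system, where k = 1/g_c. The unit system chosen for comparison and the names are illustrative; g_c is derived from the exact standard gravity (9.80665 m/s²) and the exact international foot (0.3048 m).

```python
# Standard gravity (exact, m/s^2) and the international foot (exact, m).
G_N = 9.80665
FT = 0.3048

# In SI the units are chosen so that k = 1: 1 N = 1 kg·m/s^2.
K_SI = 1.0

# With lbf, lbm and ft/s^2 the constant is not 1: 1 lbf accelerates
# 1 lbm at g_n, so k = 1/g_c with g_c = g_n expressed in ft/s^2 (about 32.174).
G_C = G_N / FT           # lbm·ft/(lbf·s^2)
K_LBF_LBM = 1.0 / G_C

def force(mass: float, accel: float, k: float) -> float:
    """Newton's second law in the general form F = k·m·a."""
    return k * mass * accel

print(force(10.0, 9.80665, K_SI))   # about 98.07 N
print(force(10.0, G_C, K_LBF_LBM))  # about 10 lbf
```

The physics is identical in both cases; the only difference is whether the chosen units make k equal to one.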
