Thanks for your answers.

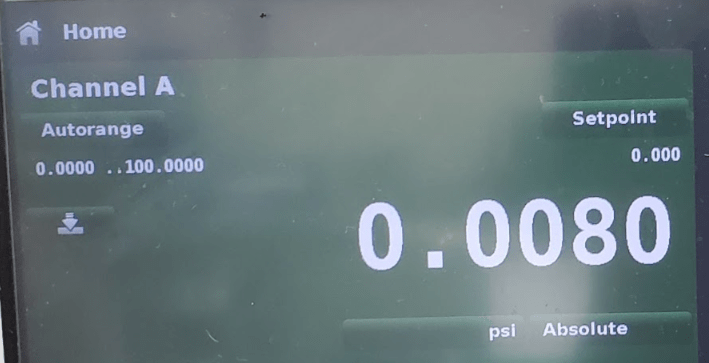

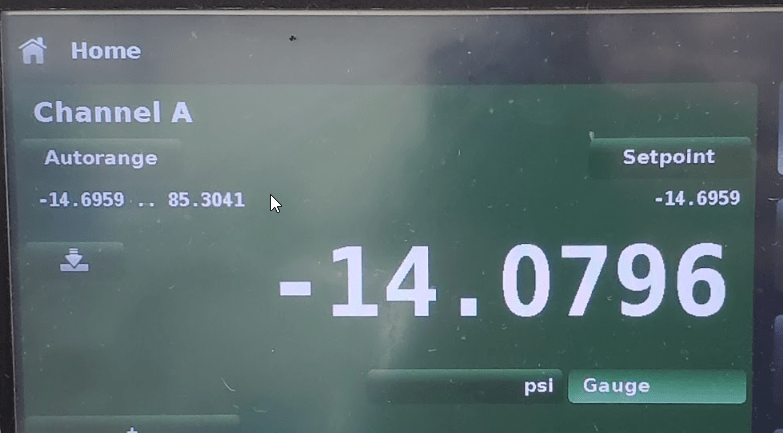

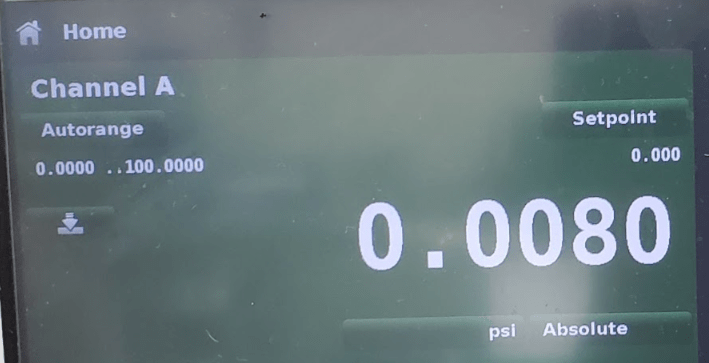

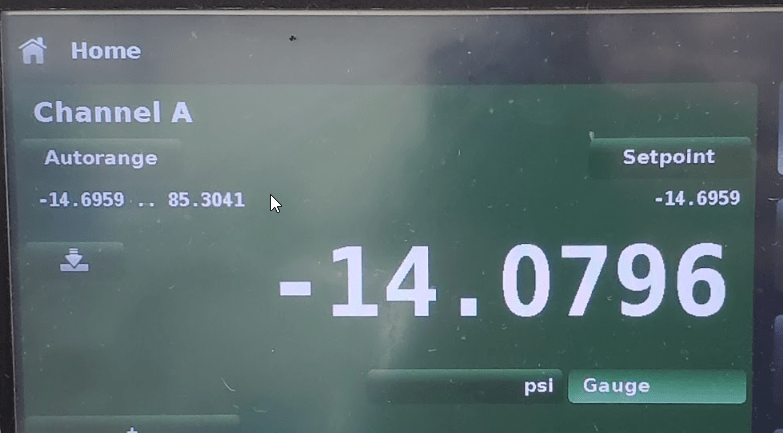

To address rb1957's question ("So the two screen shots are not the same test case?"): the two screenshots are exactly the same test case. I just pressed the "Pressure Type" button on the pressure controller to toggle between absolute and gauge pressure modes, keeping the same setpoint. I started in PSIA with a setpoint of 0 PSIA. When I toggled, the controller automatically replaced the 0 PSIA setpoint with a -14.6959 PSIG setpoint, which is exactly negative 1 standard atmosphere. Both screenshots were taken within moments of each other, with no change to the setpoint or to the unit under test.

I didn't record the barometric pressure at the moment I took the screenshots. Roughly an hour later it was 29.74 inHg, or 14.6069 PSI, as reported by localconditions.com. It turns out this is already accounted for (see the last paragraph).
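As a quick check of that conversion, here is a minimal Python sketch. It assumes the conventional inch of mercury (about 3386.389 Pa) and the exact definition of the psi; the function name is just for illustration. It reproduces the 14.6069 PSI figure from 29.74 inHg.

```python
# Assumed constants: conventional inHg and the exact psi definition.
PA_PER_INHG = 3386.389          # pascals per conventional inch of mercury
PA_PER_PSI = 6894.757293168     # pascals per pound-force per square inch

def inhg_to_psi(inhg: float) -> float:
    """Convert a barometric reading in inHg to PSI (absolute)."""
    return inhg * PA_PER_INHG / PA_PER_PSI

print(round(inhg_to_psi(29.74), 4))  # 14.6069
```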

Most of you already know the following, but I'll state it anyhow: PSI gauge is a relative measurement. The applied gauge pressure (i.e., the vacuum applied by the pressure controller in this case) is referenced to the room pressure at that moment in time. The vacuum pump can pull a certain amount of negative pressure (vacuum) relative to room pressure, its reference. Absolute pressure, on the other hand, is referenced to a perfect vacuum. Nobody can pull an absolute/perfect vacuum, so the reference sensors used in these types of controllers are adjusted by interpolation to the amount of vacuum their equipment can achieve. My particular vacuum pump (Leybold) can pull to <0.002 mbar (<0.0290 PSI).
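For checking the pump figure against its datasheet, here is a small conversion sketch in Python, using 1 mbar = 100 Pa (exact) and 1 psi ≈ 6894.757 Pa. By this conversion, 0.002 mbar works out to roughly 0.000029 PSI, whereas 0.0290 PSI corresponds to roughly 2 mbar. The helper names are illustrative only.

```python
PA_PER_MBAR = 100.0             # exact: 1 mbar = 100 Pa
PA_PER_PSI = 6894.757293168     # pascals per psi

def mbar_to_psi(mbar: float) -> float:
    """Convert millibar to PSI."""
    return mbar * PA_PER_MBAR / PA_PER_PSI

def psi_to_mbar(psi: float) -> float:
    """Convert PSI to millibar."""
    return psi * PA_PER_PSI / PA_PER_MBAR

print(f"{mbar_to_psi(0.002):.2e} psi")    # 2.90e-05 psi
print(f"{psi_to_mbar(0.0290):.2f} mbar")  # 2.00 mbar
```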

Having stated the obvious, here's my confusion: since I started in absolute mode, and my pump can pull to within 0.029 PSI of an ideal vacuum, I expected that in a system with zero leaks I could pull down to 0.029 PSIA. In fact, the pump performed even better and pulled down to 0.008 PSIA. When switching to gauge pressure, I expected to see -14.6959 + 0.029 = -14.667 PSIG relative to room pressure.
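The expected value here is simply the absolute floor minus the reference pressure. A minimal Python sketch of that arithmetic, assuming the reference is exactly 1 standard atmosphere (101325 Pa, about 14.6959 PSI) rather than the actual room pressure; the function name is hypothetical:

```python
PA_PER_PSI = 6894.757293168
STD_ATM_PSI = 101325 / PA_PER_PSI   # 1 standard atmosphere, ~14.6959 psi

def expected_gauge(abs_psia: float, reference_psia: float = STD_ATM_PSI) -> float:
    """Gauge pressure = absolute pressure - reference (ambient) pressure."""
    return abs_psia - reference_psia

print(round(STD_ATM_PSI, 4))            # 14.6959
print(round(expected_gauge(0.029), 3))  # -14.667
```

Passing the measured room pressure as `reference_psia` instead of the default gives the gauge value relative to actual ambient conditions.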

Since my original post, I DID find out that the controller was in "gauge emulation mode" (indicated by the lighter background behind "gauge"). The manual states: "In the gauge emulation mode the atmospheric pressure reading from the barometric reference transducer is subtracted from the absolute pressure reading of the channel to emulate a gauge pressure". By that formula, the reading should be 0.080 - 14.6069 = -14.5269 PSIG. Instead, it displays -14.0796 per the screenshot, which is not even close. The logic behind this still eludes me.
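To put numbers on the mismatch, here is a sketch of the manual's gauge-emulation formula (gauge = channel absolute reading - barometric reference) together with its inverse, which backs out the barometric reference the controller would have needed in order to display -14.0796. The function names are illustrative and not taken from the controller's firmware; the inputs are the 0.080 PSIA and 14.6069 PSI values used in the calculation above.

```python
def emulated_gauge(abs_reading: float, baro_reference: float) -> float:
    """Gauge emulation per the manual: absolute reading minus barometric reference."""
    return abs_reading - baro_reference

def implied_baro(abs_reading: float, displayed_gauge: float) -> float:
    """Inverse: the barometric reference that would produce the displayed gauge value."""
    return abs_reading - displayed_gauge

print(round(emulated_gauge(0.080, 14.6069), 4))  # -14.5269
print(round(implied_baro(0.080, -14.0796), 4))   # 14.1596
```

If the controller applies the formula as written, the displayed -14.0796 corresponds to a barometric reference of about 14.1596 PSI, roughly 0.45 PSI below the 14.6069 PSI figure from localconditions.com.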