San Francisco Chronicle

As noted in the article, this was inevitable. We do not yet know the cause. It raises questions.

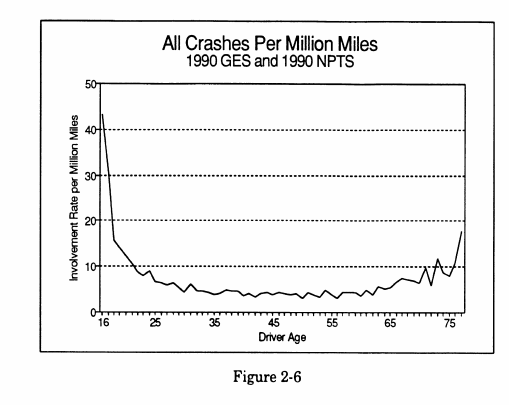

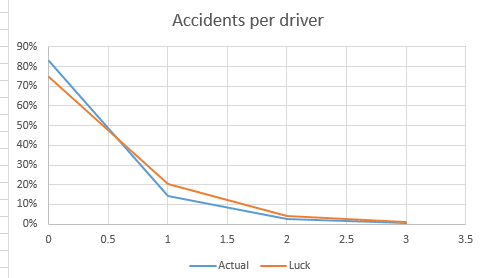

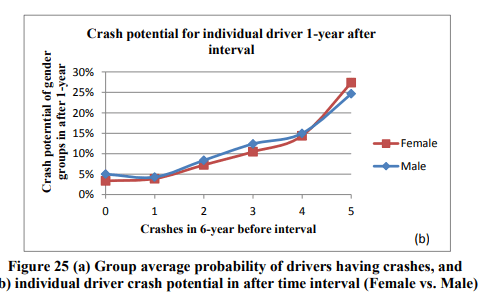

It is claimed that 95% of accidents are caused by driver error. Are accidents spread fairly evenly across the driver community, or are a few drivers responsible for most accidents? If the latter is true, it creates the possibility that there is a large group of human drivers who are better than a robot can ever be. If you see a pedestrian or cyclist moving erratically along the side of the road, do you slow down to pass them? I am very cautious when I pass a stopped bus because I cannot see what is going on in front of it. We can see patterns and anticipate outcomes.

Are we all going to have to be taught how to behave when approached by a robot car? Bright clothing at night helps human drivers. Perhaps tiny retro-reflectors sewn to our clothing will help robot LiDARs see us. Can we add codes to erratic, unpredictable things like children and pets? Pedestrians and bicycles eliminate any possibility that the robots can operate on their own right of way.

Who is responsible if the robot car you are in causes a serious accident? If the robot car manufacturer is responsible, you will not be permitted to own or maintain the car. This is a very different ecosystem from what we have now, which is not necessarily a bad thing. Personal automobiles spend about 95% of their time (a quick guesstimate on my part) parked. This is not a good use of thousands of dollars of capital.

--

JHG
